Facial Coding

Facial coding is the process of interpreting human emotions through facial expressions. Facial expressions are captured using a web camera and decoded into their respective emotions.

Decode captures the emotions of respondents as they are exposed to media or a product. Their expressions are recorded with a web camera, and facial movements, such as changes in the position of the eyebrows, jawline, mouth, and cheeks, are identified. The system can track even minute movements of facial muscles and reports data on emotions such as happiness, sadness, surprise, and anger.

Experience Facial Coding ✨

To experience how Facial Coding works, click here: https://www.entropik.io/experience/facial-coding

Once you are on the website, start Facial Coding by playing the media on the webpage. Make sure your face stays within the outline provided next to the media.

Please enable your web camera while accessing the application.

Insights from Facial Coding 👩🏻‍💻

So what do we do with this data? Facial coding results provide insight into viewers’ spontaneous, unfiltered reactions to visual content by recording and automatically analyzing their facial expressions as they watch. The data is collected in real time and yields metrics that reflect the user’s genuine emotions while viewing the content, as expressed through facial movement.

The results are made available to users in the Result Dashboards.

You will get the following metrics from facial coding:

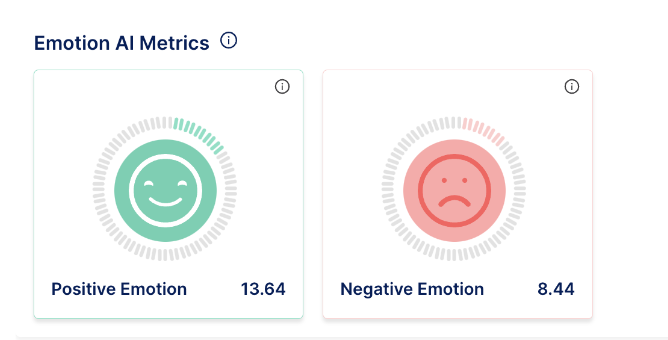

Emotion AI metrics

Emotion response refers to the collection and analysis of data related to the emotional reactions of respondents to various stimuli, such as advertisements, products, or brand experiences.

- Positive Emotions: the platform uses a combination of two emotions - Happy and Surprise - to determine whether the user felt happy while watching a particular video.

- Negative Emotions: the platform uses a combination of three emotions - Anger, Disgust, and Contempt - to determine whether the user had a negative reaction while watching a particular video.

Note: Fear and sadness are not included in negative emotions, as these emotions need to be considered in the context of the media and can be shown as standalone emotions.
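The grouping described above can be illustrated with a short Python sketch. This is only an illustration under stated assumptions: the article does not say how the platform combines the individual emotions, so the sketch assumes per-emotion scores between 0 and 1 that are summed and capped at 1. The function name `summarize_emotions` and the score format are hypothetical and are not part of the Decode product.

```python
# Emotion groups as described in this article.
POSITIVE = ("happy", "surprise")
NEGATIVE = ("anger", "disgust", "contempt")
STANDALONE = ("fear", "sadness")  # reported on their own, not as negative


def summarize_emotions(scores):
    """Group per-emotion scores (assumed 0.0 to 1.0) into summary buckets.

    Assumption: scores within a group are summed and capped at 1.0.
    The platform's actual combination rule is not documented here.
    """
    positive = min(1.0, sum(scores.get(e, 0.0) for e in POSITIVE))
    negative = min(1.0, sum(scores.get(e, 0.0) for e in NEGATIVE))
    standalone = {e: scores.get(e, 0.0) for e in STANDALONE}
    return {"positive": positive, "negative": negative, "standalone": standalone}


# Example with made-up scores for a single moment of a video.
example = summarize_emotions(
    {"happy": 0.5, "surprise": 0.25, "anger": 0.125, "fear": 0.5}
)
print(example)
```

Fear and sadness are kept out of the negative bucket so they can be interpreted in the context of the media, as the note above explains.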

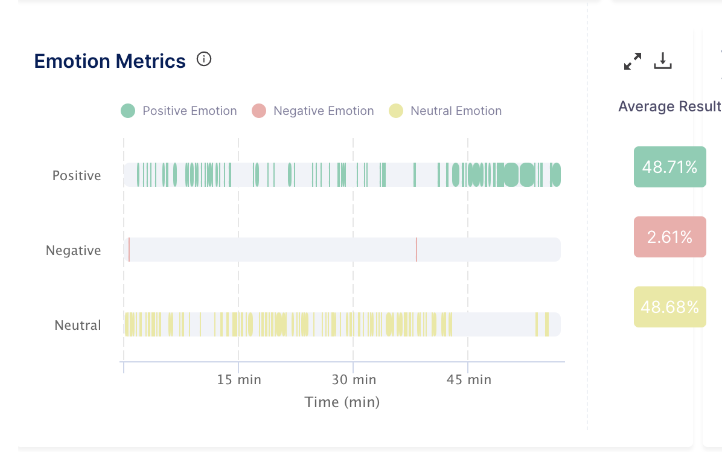

To provide a holistic view of emotional responses, the platform utilizes emotion metrics that incorporate voice, facial, and text sentiment analytics. By merging these three sources of data, the platform captures and analyzes positive, negative, and neutral emotions exhibited throughout a discussion or media content. This consolidation of data offers a more comprehensive and simplified overview of the overall emotion displayed.
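As a rough illustration of merging the three sources, the Python sketch below averages the positive, negative, and neutral shares reported by each one. The equal weighting, the function name `merge_sentiment`, and the input format are all assumptions made for this example; the article does not describe how the platform actually weighs facial, voice, and text data.

```python
LABELS = ("positive", "negative", "neutral")


def merge_sentiment(facial, voice, text):
    """Average the share of each label across the three sources.

    Assumption: every source contributes equally. Each input is a dict
    that maps a label to its share (0.0 to 1.0) for that source.
    """
    sources = (facial, voice, text)
    return {
        label: sum(s.get(label, 0.0) for s in sources) / len(sources)
        for label in LABELS
    }


# Example with made-up shares for one piece of media.
overall = merge_sentiment(
    facial={"positive": 0.5, "negative": 0.25, "neutral": 0.25},
    voice={"positive": 0.25, "negative": 0.25, "neutral": 0.5},
    text={"positive": 0.75, "negative": 0.25, "neutral": 0.0},
)
print(overall)
```

A plain average keeps the example easy to follow; a weighted average would be a natural variation if one source is considered more reliable than the others.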